In the age of A.I. - Part 4: Will it kill us?

A lot of people believe that AI is a thousand times smarter than we are. When you think of AI turning aggressive, what is the first thing that comes to mind?

Killer robots? Robots dominating humans? The Terminator?

AI, put simply, is software that writes itself. We tend to think of software as something we created: we wrote it, and the machines did what we told them to do, always following our commands. This is no longer true. AI now writes itself at speeds we can hardly comprehend. It writes itself independently and autonomously, and it develops its own way of thinking. Scientists know that you can’t take it apart again and figure out what it has done.

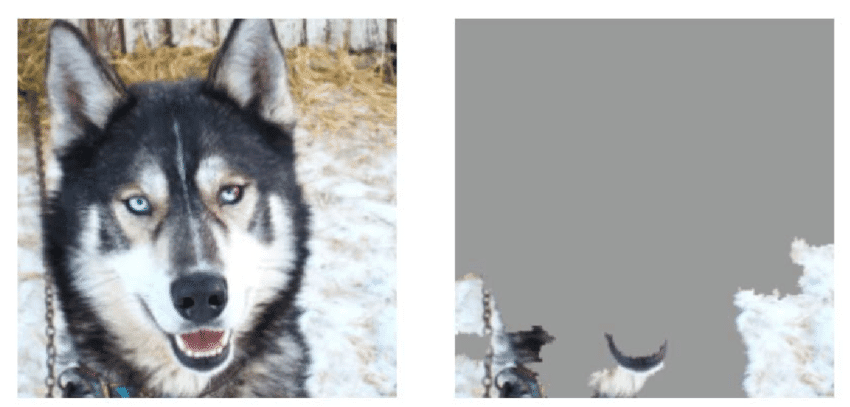

For example, an AI mistook this picture of a husky for a wolf.

When you look at it, which parts of the picture tell you that it’s a husky?

Probably the eyes, the nose, the fur, the shape of the head, the position of the ears, and so on.

Guess which part of the picture the AI looked at? The snow in the background.

There was bias in the data set that was fed to this algorithm. Most of the pictures of wolves were taken in snow, so the AI conflated the presence of snow with the presence of wolves. The scary thing is that the scientists had no idea this was happening.
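To make the mechanism concrete, here is a minimal sketch of how a skewed data set can push a model toward the background instead of the animal. Everything in it is an assumption made for illustration: the two hand-made features (`wolf_like_face`, `snow_background`), the counts in the toy training set, and the one-feature "stump" classifier. It is not the model or the data from the husky example above.

```python
# Toy illustration only: each "photo" is reduced to two made-up binary features.
FEATURES = ("wolf_like_face", "snow_background")


def build_training_set():
    """Return (features, label) pairs with a deliberately skewed background."""
    rows = []
    # 10 wolves: 9 photographed in snow, 7 with a clearly wolf-like face.
    for i in range(10):
        rows.append(({"wolf_like_face": int(i < 7), "snow_background": int(i < 9)}, "wolf"))
    # 10 huskies: only 1 photographed in snow, 4 with a wolf-like face.
    for i in range(10):
        rows.append(({"wolf_like_face": int(i < 4), "snow_background": int(i < 1)}, "husky"))
    return rows


def predict(feature, photo):
    """Say 'wolf' whenever the chosen feature is present, else 'husky'."""
    return "wolf" if photo[feature] else "husky"


def accuracy(rows, feature):
    """Fraction of training photos the one-feature rule labels correctly."""
    hits = sum(predict(feature, photo) == label for photo, label in rows)
    return hits / len(rows)


def train_stump(rows):
    """Pick the single feature that best separates the training labels."""
    return max(FEATURES, key=lambda feature: accuracy(rows, feature))


rows = build_training_set()
chosen = train_stump(rows)
husky_in_snow = {"wolf_like_face": 0, "snow_background": 1}
print(chosen, predict(chosen, husky_in_snow))
```

On this toy data the rule based on `snow_background` scores higher on the training set than the rule based on the face, so the learner keeps the snow rule and then labels a husky standing in snow as a wolf. Nothing in the training accuracy reveals that the rule is looking at the wrong thing.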

How does this affect the world?

Right now, many people get information from AI systems, and they trust it. The COMPAS criminal sentencing algorithm is used in 13 U.S. states to predict criminal recidivism. ProPublica found that COMPAS was 77% more likely to flag you as a potentially violent offender if you are African-American than if you are Caucasian. This is a real system used in the real world to judge real people, just like the surveillance capitalism system in China.

In eight seconds, a loan algorithm can assess 5,000 unique features from all of your data. Some of those features are subtle, like how confidently you type your loan application, and some are very unexpected, like your phone's battery level. A person who always keeps the phone battery low has a higher chance of defaulting on debt than others.

But because so many calculations happen in such a short time, and supercomputers carry them out on their own, we don’t know what is happening inside those systems. What if, one day, an AI system decides that you are a criminal and you are not allowed to take out a loan?

AI systems have become so popular that now military agencies are starting to take an interest in them.

We are building weapons with AI in them. Take Pegasus, an X-47B owned by the U.S. Navy. It’s an AI-driven drone that can fly up to 2,000 miles into enemy zones. This drone is invisible: not invisible to radar, but hidden from human eyes. The bottom has an LED layer, and cameras on top film the sky and project a live image of the clouds above the drone onto the underside. Moreover, it can be fitted with a kill-decision AI program, so this autonomous drone can decide by itself whether or not to kill somebody. You read that right.

The development of autonomous weapons raises a question: will our next generation of soldiers one day no longer have to kill?

It’s an appealing idea that robots will someday fight other robots and no one will get hurt. Unfortunately, it doesn’t work like that. If it did, we could just say: “Well, let’s sit here and play a video game to decide who wins this war.” No side will retreat until it can no longer bear the consequences of war. Even if the enemy destroys all your robots, they will not stop until they kill all your people.

In this century, wars don’t happen only on the battlefield. They happen on the internet as well, and social networks have become one of the most potent weapons. These days social networks track your behavior and apply AI algorithms to predict your interests. It’s not just about what you post; it’s the way you post it. It’s not just that you make plans to see your friends; it’s whether you say “I’ll see you later” or “I’ll see you at 5:15PM”. It’s not just that you talk about what you want to buy at the supermarket; it’s whether you write the items out haphazardly in a paragraph or list them as bullet points. All of these tiny signals are behavioral surplus with predictive value.
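As a rough sketch of what turning "the way you post" into data could look like, the snippet below pulls a few style signals out of a message. The function name `style_signals`, the two regular expressions, and the choice of signals are hypothetical and chosen only to mirror the examples above; no real social network's pipeline is being described.

```python
import re

# Hypothetical patterns, chosen only to mirror the examples in the text.
EXACT_TIME = re.compile(r"\b\d{1,2}:\d{2}\s*(?:AM|PM)?", re.IGNORECASE)
BULLET_LINE = re.compile(r"^\s*(?:[-*•]|\d+\.)\s+", re.MULTILINE)


def style_signals(message: str) -> dict:
    """Extract a few signals about how a message is written, not what it says."""
    return {
        # "I'll see you at 5:15PM" versus "I'll see you later"
        "mentions_exact_time": bool(EXACT_TIME.search(message)),
        # a shopping list as bullet points versus a loose paragraph
        "uses_bullet_list": bool(BULLET_LINE.search(message)),
        "word_count": len(message.split()),
    }


vague_plan = style_signals("I'll see you later")
precise_plan = style_signals("I'll see you at 5:15PM")
bulleted_list = style_signals("- milk\n- eggs\n- bread")
loose_list = style_signals("need milk and eggs and maybe some bread")
print(vague_plan, precise_plan, bulleted_list, loose_list, sep="\n")
```

Each signal is trivial on its own. The predictive value described above would come from collecting many such signals per user over time and feeding them to a model, which this sketch does not attempt.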

In 2010, Facebook ran an experiment on AI's predictive power. They called it a “social contagion” experiment. They wanted to see if online messaging could influence real-world behavior, and the aim was to get more people to the polls in the 2010 midterm elections. They showed 61 million users an “I voted” button together with the faces of friends who had voted. A subset of users received just the button. In the end, they claimed to have nudged 340,000 people to vote. In another experiment, they found that by adjusting users' feeds they could make those users happy or sad. Facebook was unaware of where this had taken them until 2018, when the Facebook–Cambridge Analytica data scandal broke. In that scandal, the British consulting firm Cambridge Analytica obtained the personal data of millions of Facebook users without their permission, predominantly for use in political advertising. The purpose was to target and manipulate voters in the 2016 U.S. presidential campaign.

“Now we know that any billionaire with enough money, who can buy the data, buy the AI, they too can commandeer the public and infect and infiltrate and upend our democracy with the same methodologies that surveillance capitalism uses every single day,” said Shoshana Zuboff, author of The Age of Surveillance Capitalism.

In 2016, a game of Go gave us a glimpse of the future of artificial intelligence. In this explosive growth of AI, we hold a mighty new power in our hands. What happens next depends on whether we let this power blind us or create a careful strategy to harness it.

The end of part 04.